Camera Payload

The Depth Camera payload began as part of the NASA-funded RESOURCE project, which studies analog extreme environments and how virtual reality can assist explorers and astronauts in the field. By combining RGB and continuous-wave time-of-flight imagery, we can capture high-resolution (sub-centimeter) images of the Moon’s surface. This will be the first use of time-of-flight technology to capture this kind of data from the lunar surface. It will help us better understand the unique terrain of the south polar region, study in situ resources such as water ice and volatiles, and prepare for future human missions.
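Continuous-wave time-of-flight cameras do not time a single light pulse. Instead, they infer distance from the phase shift between emitted modulated infrared light and its reflection. The sketch below illustrates that relationship. It assumes an ideal single-frequency sensor and the common four-sample (0°, 90°, 180°, 270°) demodulation scheme. The 100 MHz modulation frequency and the simulated samples are illustrative assumptions, not the Azure Kinect's actual firmware pipeline or calibration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def phase_from_samples(c0, c1, c2, c3):
    """Recover the phase shift (radians) from four correlation samples.

    Assumed model: sample k is taken at a demodulation offset of k * 90 degrees
    and follows c_k = B + A * cos(phi + k * pi / 2), so that
    c0 - c2 = 2A cos(phi) and c3 - c1 = 2A sin(phi). The offset B cancels out.
    """
    phi = math.atan2(c3 - c1, c0 - c2)
    return phi % (2 * math.pi)  # wrap into [0, 2*pi)


def depth_from_phase(phi, mod_freq_hz):
    """Distance for phase shift phi at a single modulation frequency.

    The light travels out and back, so the round trip 2d spans phi / (2*pi)
    of one modulation wavelength: d = c * phi / (4 * pi * f).
    """
    return SPEED_OF_LIGHT * phi / (4 * math.pi * mod_freq_hz)


def ambiguity_range(mod_freq_hz):
    """Largest distance measurable before the phase wraps around: c / (2f)."""
    return SPEED_OF_LIGHT / (2 * mod_freq_hz)


# Usage: simulate a target 0.6 m away at a hypothetical 100 MHz modulation.
f = 100e6
true_d = 0.6
phi_true = 4 * math.pi * f * true_d / SPEED_OF_LIGHT
samples = [1.0 + 0.5 * math.cos(phi_true + k * math.pi / 2) for k in range(4)]
d = depth_from_phase(phase_from_samples(*samples), f)
```

At 100 MHz the phase wraps after roughly 1.5 m. This limit is one reason CW-ToF sensors commonly combine several modulation frequencies to resolve longer distances.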

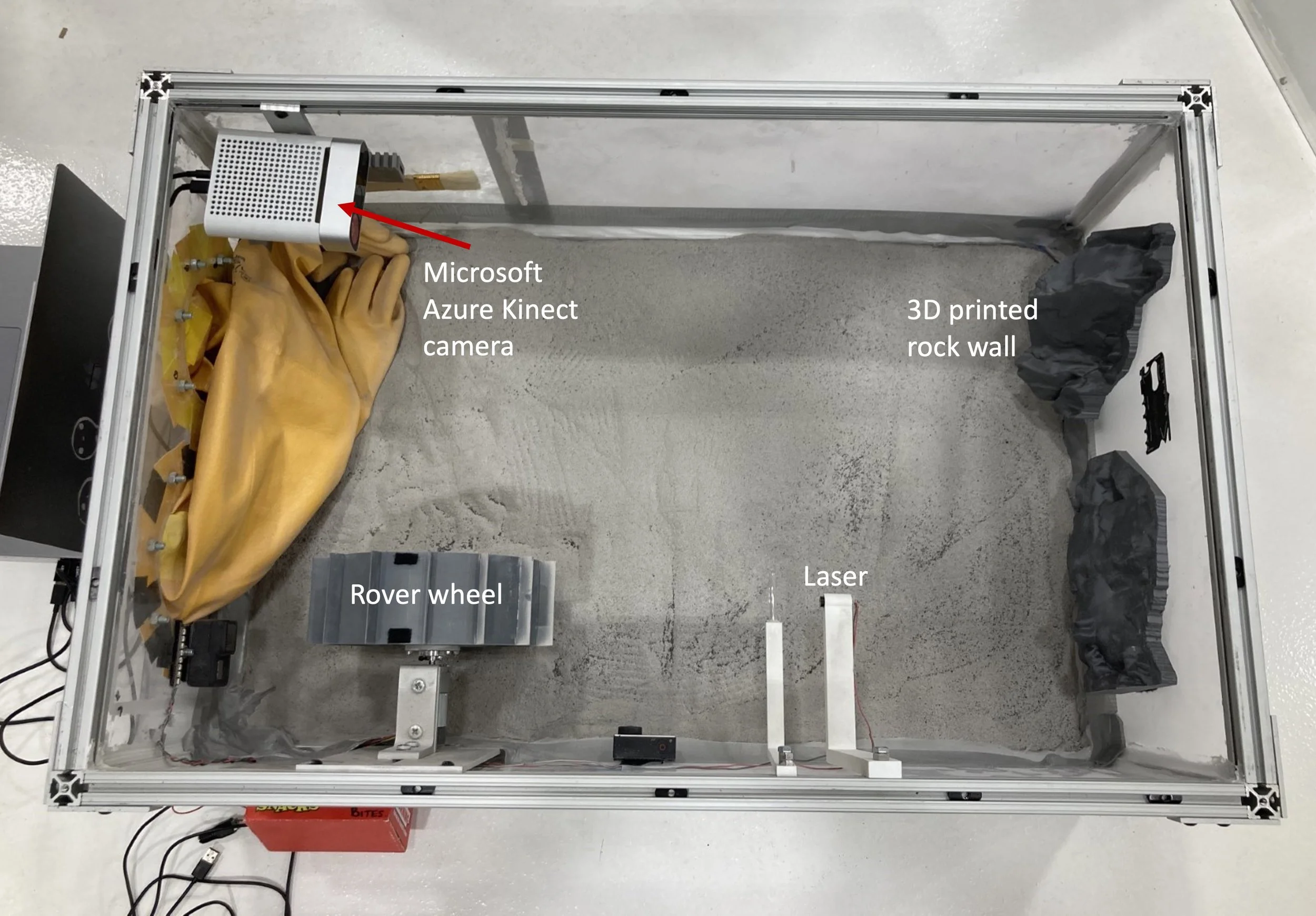

Working alongside the team at NASA Ames, the MIT Media Lab Space Exploration Initiative ruggedized and flight-qualified a Microsoft Azure Kinect for the IM-2 mission. The camera was tested on our May 2022 parabolic flight (covered in the black box for light-control tests), including several lunar-g and zero-g parabolas. It was also field-tested to develop a flexible concept of operations during our October 2022 expedition to Svalbard, Norway, and at the SSERVI Lunar Testbed at the NASA Ames Research Center.

The Microsoft Azure Kinect for a Lunar Environment

The Microsoft Azure Kinect is a commercial off-the-shelf (COTS) time-of-flight (ToF) depth camera that provides high-resolution, near-field depth data. Our MIT team has selected it for modification for this lunar surface technology demonstration mission. The collected data will be used to construct a high-resolution, near-field virtual environment of the lunar surface for scientific applications and to support astronaut field-work training for the historic Artemis III crewed mission. This will give NASA, MIT researchers, and other stakeholders an unprecedented visual dataset of the lunar surface. We expect the high-resolution imagery to support an entirely novel approach to remote mission control and live EVA (extravehicular activity) for Moon missions throughout this decade.
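Building a 3D virtual environment from depth frames typically starts by back-projecting each depth pixel into a 3D point. The sketch below shows that step. It assumes an undistorted pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`) and depth values stored in millimetres, with 0 marking pixels that returned no measurement. The real depth camera also has lens distortion, and a production pipeline would correct for it.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def depth_to_points(depth_mm: List[List[int]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point3]:
    """Back-project a depth image into 3D points (metres) in the camera frame.

    Ideal pinhole model (an assumption): for pixel (u, v) with depth Z,
    X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy.
    Pixels with depth 0 are treated as invalid and skipped.
    """
    points: List[Point3] = []
    for v, row in enumerate(depth_mm):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # no return / invalid measurement
            z = d / 1000.0  # millimetres -> metres
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Usage: a tiny 3x3 frame with two invalid pixels and hypothetical intrinsics.
depth = [
    [0, 500, 500],
    [500, 500, 500],
    [500, 500, 0],
]
cloud = depth_to_points(depth, fx=500.0, fy=500.0, cx=1.0, cy=1.0)
```

Each frame yields a point cloud. Accumulating many frames is what would let the near-field scene be rebuilt as a navigable virtual environment.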

The Azure Kinect combines ToF depth data with RGB video and an IMU (inertial measurement unit) to give a complete picture of the area immediately surrounding the device. A COTS part with integrated ToF and RGB imaging lowers development costs. It also reduces the processing needed to align the two cameras' different fields of view and positions when rendering the image. The unit will be modified for integration with the MAPP-1 rover. The changes include several weight-reduction modifications, removal of plastics incompatible with the vacuum environment, and custom changes to the housing and electronics so that the camera can be integrated as a payload on the Lunar Outpost rover. This project demonstrates two key new approaches to deep space exploration experiments. The first is creative use of COTS products. The second is a rapid-prototyping and innovation approach that delivers a novel scientific instrument payload in a matter of months rather than years.
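Aligning the depth and RGB views comes down to two steps. First, express each 3D point from the depth camera in the colour camera's frame using the rigid rotation `R` and translation `t` between the two sensors. Second, project that point with the colour camera's intrinsics. The sketch below assumes ideal pinhole cameras without lens distortion. All calibration values are hypothetical; on the real device they come from its stored calibration.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def depth_point_to_color_pixel(p_depth: Vec3, R: Mat3, t: Vec3,
                               fx: float, fy: float, cx: float, cy: float
                               ) -> Optional[Tuple[float, float]]:
    """Project a 3D point from the depth-camera frame into the RGB image.

    Rigid transform p_color = R @ p_depth + t, then pinhole projection
    u = fx * X / Z + cx, v = fy * Y / Z + cy.
    Returns None if the point lies behind the colour camera.
    """
    x, y, z = (
        sum(R[i][j] * p_depth[j] for j in range(3)) + t[i] for i in range(3)
    )
    if z <= 0:
        return None  # not visible to the colour camera
    return (fx * x / z + cx, fy * y / z + cy)


# Usage: identity rotation and a hypothetical 32 mm sideways offset.
R_identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t_offset = (-0.032, 0.0, 0.0)
pixel = depth_point_to_color_pixel((0.0, 0.0, 1.0), R_identity, t_offset,
                                   fx=600.0, fy=600.0, cx=640.0, cy=360.0)
```

Both sensors share one rigid housing, so `R` and `t` stay fixed. They can be calibrated once rather than re-estimated for every deployment, which is part of why an integrated unit simplifies rendering.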

Cody Paige captures data with the Kinect in Svalbard. Credit: Maggie Coblentz

Cody Paige, Sean Auffinger and Jess Todd working together to fix the mini rover in the field. Field work often consists of troubleshooting, and in remote locations we need to be prepared to do this in challenging conditions. Credit: Maggie Coblentz

The RoverRobotics Mini Rover with the Microsoft Azure Kinect mounted to a custom payload tower. Credit: Maggie Coblentz

Cody Paige operates the RoverRobotics Mini Rover. Credit: Maggie Coblentz

Cody Paige collecting near-field 3D depth data with the Microsoft Azure Kinect time-of-flight/RGB camera. Credit: Maggie Coblentz

Arduino environmental sensor capturing temperature, luminosity, humidity and sound. Credit: Maggie Coblentz

Cody Paige taking geological field notes for ground-truthing of the captured data. Credit: Maggie Coblentz